Hello All,

Today, I would like to talk about iostat, a monitoring tool for physical disks. It is a great tool if you want to watch and improve your disks' performance. It monitors system input/output device loading by observing the time the devices are active in relation to their average transfer rates. Its reports can therefore guide changes to the system configuration that better balance the input/output load between physical disks.

iostat

To find out which package contains the iostat utility, run the command below:

yum whatprovides "*/iostat"

Figure 1

Install iostat:

yum install sysstat

Running iostat without any parameters prints a single report and exits; this report shows statistics accumulated since the system was booted:

Figure 2

It generates two types of reports: the CPU Utilization report and the Device (disk) Utilization report.

According to the man page, the fields are:

%user : Show the percentage of CPU utilization that occurred while executing at the user level (application).

%nice : Show the percentage of CPU utilization that occurred while executing at the user level with nice priority.

%system : Show the percentage of CPU utilization that occurred while executing at the system level (kernel).

%iowait : Show the percentage of time that the CPU or CPUs were idle during which the system had an outstanding disk I/O request.

%steal : Show the percentage of time spent in involuntary wait by the virtual CPU or CPUs while the hypervisor was servicing another virtual processor.

%idle : Show the percentage of time that the CPU or CPUs were idle and the system did not have an outstanding disk I/O request.

tps : Indicate the number of transfers per second that were issued to the device.

kB_read/s : Indicate the amount of data read from the device expressed in kilobytes per second.

kB_wrtn/s : Indicate the amount of data written to the device expressed in kilobytes per second.

kB_read : The total number of kilobytes read.

kB_wrtn : The total number of kilobytes written.
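As a small illustration of reading these fields from a script, the sketch below pulls the %iowait value out of an iostat CPU report. The function name iowait_from_report is made up for this example, and it assumes the layout printed by iostat -c (an "avg-cpu:" header line followed by one line of values); treat it as a starting point rather than a definitive parser.

```shell
# Hypothetical helper: print the %iowait value found in an `iostat -c` report
# read from standard input. Assumes an "avg-cpu:" header followed by a value line.
iowait_from_report() {
  awk '
    /^avg-cpu:/ {
      # Find the %iowait column. The value line has no "avg-cpu:" label,
      # so its fields are shifted left by one.
      for (i = 2; i <= NF; i++) if ($i == "%iowait") col = i - 1
      want = 1
      next
    }
    want && NF { if (col) print $col; want = 0 }
  '
}

# Example use on a live system (requires the sysstat package):
#   iostat -c | iowait_from_report
```

Looking the column up by its header name, instead of hard-coding a field number, keeps the helper working if a different sysstat version adds or reorders columns.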

If you want a report for CPU utilization only, use the "-c" parameter; for device utilization only, use "-d":

iostat -c

iostat -d

To display statistics in megabytes per second, use "-m":

iostat -m

Figure 3

To run iostat continuously, give it an interval parameter without a count parameter. For example, to print a report every 3 seconds until you interrupt it, use the command below:

iostat -d 3

Figure 4

To stop after a fixed number of reports, add a count parameter after the interval parameter. For example, to generate a report every 3 seconds and stop after 2 reports, use the command below:

iostat -d 3 2

Figure 5
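One thing to keep in mind with interval reports: according to the man page, the first report covers the time since boot, and each later report covers the interval since the previous one. The sketch below keeps only the last device report from the output. The function name last_device_report is hypothetical, and the filter assumes every report starts with a header line beginning with "Device".

```shell
# Hypothetical helper: keep only the last device report from `iostat -d` output
# read on standard input. Assumes each report starts with a "Device" header line.
last_device_report() {
  awk '
    /^Device/  { buf = ""; seen = 1 }      # a new report starts: drop the earlier one
    seen && NF { buf = buf $0 "\n" }       # collect the non-empty lines of this report
    END        { printf "%s", buf }
  '
}

# Example use on a live system:
#   iostat -d 3 2 | last_device_report
```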

Use the "-x" parameter to extend the report with more statistics:

iostat -x

Figure 6

If you need statistics for just one specific disk, for example dm-0, run the command below:

iostat -x dm-0

Figure 7
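The extended report includes a %util column, which is often the first number people look at. As a rough sketch, the helper below prints %util for one device from iostat -x output. The name device_util is invented for this example, and the column is located by its header because the set of extended columns differs between sysstat versions.

```shell
# Hypothetical helper: print the %util value for one device from `iostat -x`
# output read on standard input. The device name is the first argument.
device_util() {
  awk -v dev="$1" '
    # Remember which column holds %util each time a header line goes by.
    /^Device/ { for (i = 1; i <= NF; i++) if ($i == "%util") col = i; next }
    col && $1 == dev { print $col }
  '
}

# Example use on a live system:
#   iostat -x dm-0 | device_util dm-0
```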

A disadvantage of the iostat command is that it can't tell you which process or application is causing the load on a particular device. In the next part, I will talk about the iotop command, which addresses this.

Hope you enjoyed it,

Khosro Taraghi

Sunday, November 30, 2014

Thursday, October 30, 2014

Stress Command

Hello all,

Today, I am going to talk about the stress command. It's a very useful and easy command if you want to impose load on your systems/servers and then review how your application behaves under pressure. For example, you can use it to review your application's or website's performance on a busy server. Let's say you know your web server works fine with 100 customers connected at the same time on a regular business day, which is the usual case in your company, and that this uses roughly 30% of your server's CPU. But how does your web server perform when your company has a big sale or it's close to the end of the year, Boxing Day for instance, and you know that the number of connected customers will increase by 50%? It's too late if you find out on that day that your application is poor at memory management or has a memory leak, and your website goes down under heavy load; what's more, your company will lose money. So, you can predict and prevent that situation by using this command to raise CPU and memory load, simulate those conditions, and then figure out the problem in your application or servers.

Here is how it works:

First, you need to install the stress command:

yum install stress

By the way, you can stress your system by CPU, memory, I/O, HDD, or a combination of them. You can get a list of options and parameters in the man page as well.

Example 1:

Bring the system load average up to an arbitrary value using CPU load. In this example, stress forks 4 processes, each calculating the sqrt() of a random number obtained with the rand() function.

stress -c 4

or

stress --cpu 4 --timeout 15

"--timeout 15" means the run times out after 15 seconds.

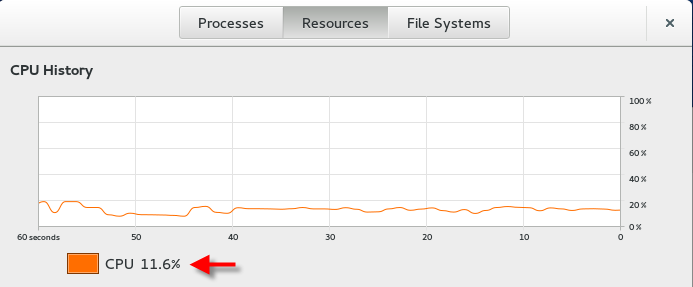

Figure 1 (before running command)

Figure 2 (after running command)
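If you would rather capture the before and after numbers in a script than in screenshots, a minimal sketch is shown below. It reads the 1-minute load average from /proc/loadavg on Linux. The function name load1 and its optional file argument are assumptions made for this example.

```shell
# Hypothetical helper: print the 1-minute load average.
# The first field of /proc/loadavg is the 1-minute value; an alternative file
# can be passed as the first argument, which is handy for testing.
load1() {
  cut -d ' ' -f 1 "${1:-/proc/loadavg}"
}

# Example use around a stress run:
#   before=$(load1)
#   stress --cpu 4 --timeout 15
#   after=$(load1)
#   echo "1-minute load average: $before -> $after"
```

Because the 1-minute figure is an average, a 15-second run will usually move it only part of the way toward 4; a longer timeout gives a clearer picture.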

Example 2:

To see how your system performs when it is I/O bound, use the -i switch.

stress -i 4

or

stress --io 4

This will call sync() which is a system call that flushes memory buffers to disk.

Of course, you can combine the above commands, like this:

stress -c 4 -i 4 --verbose --timeout 15

Example 3:

Now, let's try memory stress. The following command forks 7 processes each spinning on malloc():

stress -m 7 --timeout 15

or

stress --vm 7 --timeout 15

Figure 3 (before running command)

Figure 4 (after running command)

According to the man page, the --vm-hang option instructs each vm hog process to go to sleep after allocating memory. This contrasts with their normal behavior, which is to free the memory and reallocate it. This is useful for simulating low memory conditions on a machine. For example, the following command allocates 256M (2 x 128M) of RAM, and each worker holds its allocation for 10 seconds before freeing and reallocating it.

stress --vm 2 --vm-bytes 128M --vm-hang 10

Figure 5
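It is worth working out how much memory a --vm run will claim before starting it, so that you do not push the machine further than you intended. The total is simply the number of workers multiplied by the size per worker. The helper name vm_total_mb below is made up for this sketch, and the 256 MB figure in the second call is the default --vm-bytes value given in the stress help text.

```shell
# Hypothetical helper: total memory (in MB) that `stress --vm N --vm-bytes SIZE`
# asks for. Arguments: number of workers, megabytes per worker.
vm_total_mb() {
  echo $(( $1 * $2 ))
}

vm_total_mb 2 128   # stress --vm 2 --vm-bytes 128M  -> prints 256
vm_total_mb 7 256   # stress --vm 7 (default 256 MB per worker) -> prints 1792
```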

And here is what the man page says about the stress command:

'stress' is not a benchmark, but is rather a tool designed to put given subsystems under a specified load. Instances in which this is useful include those in which a system administrator wishes to perform tuning activities, a kernel or libc programmer wishes to evaluate denial of service possibilities, etc.

And that's pretty much it. I hope you enjoyed it. Don't forget to leave your comments here.

Thanks all,

Khosro Taraghi

Monday, September 29, 2014

What Is GlusterFS Filesystem and How Does It Configure In Linux?

Hello everybody,

Today, I would like to talk about GlusterFS in Linux. Note: it’s Gluster and not Cluster. GlusterFS is a distributed file system and it’s very flexible. You can claim free space on each server and combine it into one huge virtual drive for clients. It’s flexible because you can add or remove servers to make it bigger or smaller, and you can also configure it over TCP for remote access. It reminds me of cloud storage or RAID, but it is much more flexible. It’s fast too and, most importantly, it’s easy to administer. It can balance data and workload, and it is highly available. It’s really cool. And yes, it’s open source.

Here, I am demonstrating it with 4 CentOS 7.0 machines: 3 servers and 1 client.

At the moment that I am writing this blog, the latest version is GlusterFS 3.5.2. I am going to download the packages and install them manually; however, you can install it easily with the yum command. The only catch is that the yum repository is not up to date, so you may end up with a lower version of GlusterFS such as 3.4.0, but the installation is much easier. I am going to install it manually for demonstration purposes.

To download and install GlusterFS, run the following commands as the root user on each server and also on the client:

wget http://download.gluster.org/pub/gluster/glusterfs/3.5/3.5.2/CentOS/epel-7Everything/x86_64/glusterfs-3.5.2-1.el7.x86_64.rpm

yum install gcc git nfs-utils perl rpcbind

yum install rsyslog-mmjsonparse

yum install libibverbs

yum install librdmacm

rpm -Uvh glusterfs-3.5.2-1.el7.x86_64.rpm \
  glusterfs-api-3.5.2-1.el7.x86_64.rpm \
  glusterfs-api-devel-3.5.2-1.el7.x86_64.rpm \
  glusterfs-cli-3.5.2-1.el7.x86_64.rpm \
  glusterfs-debuginfo-3.5.2-1.el7.x86_64.rpm \
  glusterfs-devel-3.5.2-1.el7.x86_64.rpm \
  glusterfs-extra-xlators-3.5.2-1.el7.x86_64.rpm \
  glusterfs-fuse-3.5.2-1.el7.x86_64.rpm \
  glusterfs-geo-replication-3.5.2-1.el7.x86_64.rpm \
  glusterfs-libs-3.5.2-1.el7.x86_64.rpm \
  glusterfs-rdma-3.5.2-1.el7.x86_64.rpm \
  glusterfs-server-3.5.2-1.el7.x86_64.rpm

Figure 1

After completing the installation, run the following command to find out the version. Note: it’s under the GNU license:

glusterfs -V

Figure 2

Now, you need to open the firewall. Run the commands below on all machines:

iptables -I INPUT -m state --state NEW -m tcp -p tcp --dport 24007:24011 -j ACCEPT

iptables -I INPUT -m state --state NEW -m tcp -p tcp --dport 111 -j ACCEPT

iptables -I INPUT -m state --state NEW -m udp -p udp --dport 111 -j ACCEPT

If you add more servers, you must open more ports in the firewall, one for each additional server: for example, 24012, 24013, … in this case.

Now, it’s time to start the glusterd service:

service glusterd start

or

/bin/systemctl start glusterd.service

Figure 3

Now, we need to configure our servers. You must select one of these servers, it doesn’t matter which one, to act as the master server. The first thing you need to do is create a storage pool. Log in as the root user on the master server and run the following commands:

service glusterd restart

gluster peer probe 192.168.157.133

gluster peer probe 192.168.157.134

You must replace the IPs above with your own IP addresses. You don’t need to restart your glusterd service, but if you get the “Connection failed. Please check if gluster daemon is operational” message as I did (shown in Figure 4), just restart the glusterd service and then you should be fine.
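With more than a couple of servers, typing one probe per peer gets tedious. A minimal sketch of a loop is shown below. The function name probe_peers and the GLUSTER variable are inventions for this example (GLUSTER only exists so that the loop can be dry-run with echo), and the loop stops at the first probe that fails.

```shell
# Hypothetical helper: probe every peer IP given as an argument.
# GLUSTER is an assumed override for dry runs, for example GLUSTER=echo;
# when it is unset, the real gluster command is used.
probe_peers() {
  for ip in "$@"; do
    "${GLUSTER:-gluster}" peer probe "$ip" || return 1
  done
}

# Example use on the master server:
#   probe_peers 192.168.157.133 192.168.157.134
```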

Figure 4

To see what’s going on, run this command:

gluster peer status

Figure 5

Now, it’s time to create a volume, or in other words a virtual volume. I call it a virtual volume because the client only sees one volume or disk drive; however, this volume resides on all the servers, 3 servers in this case. Create an empty directory on each server, one command per server:

mkdir -p /mnt/storage.132

mkdir -p /mnt/storage.133

mkdir -p /mnt/storage.134

Then, on the master server, run the command below to create a volume:

gluster volume create MyCorpVol transport tcp 192.168.157.132:/mnt/storage.132 192.168.157.133:/mnt/storage.133 192.168.157.134:/mnt/storage.134 force

I used the “force” option because gluster kept warning me that it’s not a good idea to put a brick in the root directory or to take space from the system partition; but since this is for training purposes, I just forced it. Ideally, you should put it on a separate disk, anything other than the system partition. See Figure 6.

Oh! By the way, we have 3 types of volume:

1. Distributed volume (the command above). A distributed volume distributes files across all servers (like a load balancer). Because it balances the load, there is no pressure on any one server, and reading and writing files is fast. However, there is no fault tolerance: if one of the servers goes down, you lose the part of your data that resides on that particular server.

2. Replicated volume. A replicated volume writes files to all servers (replicas), so you have fault tolerance; however, writing files is slower. The good thing is that you can define the number of replicas. For example, if you have 4 servers, you can define 2 replicas. That way, you have both fault tolerance and speed.

3. Distributed striped volume. This is very fast because gluster divides files into equal pieces and writes them to all servers at the same time. However, there is no fault tolerance.
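To make the "4 servers, 2 replicas" case concrete: gluster expects the number of bricks to be a multiple of the replica count, and it spreads files across the resulting groups of mirrored bricks. The sketch below only does that arithmetic. The function name replica_sets is made up for this example and it does not talk to gluster at all.

```shell
# Hypothetical helper: given a brick count and a replica count, print how many
# replica sets the volume would have, or fail if the counts do not fit together.
replica_sets() {
  bricks=$1
  replica=$2
  if [ "$replica" -lt 1 ] || [ $(( bricks % replica )) -ne 0 ]; then
    echo "brick count must be a multiple of the replica count" >&2
    return 1
  fi
  echo $(( bricks / replica ))
}

replica_sets 4 2   # 4 bricks, replica 2 -> prints 2 (two mirrored pairs)
```

With 4 bricks and replica 2, each file lives on both bricks of one pair, so a single server can fail without losing data while writes still go to only two servers.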

Now, it’s time to start the volume we created. Run the command below:

gluster volume start MyCorpVol

To see the volume’s info, run this command:

gluster volume info

Figure 6

On the client side, we first need to mount the volume we created. Run the commands below to mount it:

mkdir -p /opt/CorpStorage

mount -t glusterfs 192.168.157.132:/MyCorpVol /opt/CorpStorage

And yes, of course you can add it to /etc/fstab to mount it automatically after a reboot. Now, let’s try it and see how it works. I am going to copy some files to the mounted folder. See Figure 7.
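For the /etc/fstab option mentioned above, an entry could look like the line this sketch prints. The helper name fstab_entry is invented for the example, and the mount options (defaults,_netdev, which tells the system to wait for the network before mounting) are a common choice that I am assuming here, not something taken from this setup.

```shell
# Hypothetical helper: print an /etc/fstab line for a GlusterFS mount.
# Arguments: server, volume name, mount point.
# The options "defaults,_netdev" are an assumption; adjust them to your needs.
fstab_entry() {
  printf '%s:/%s %s glusterfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}

fstab_entry 192.168.157.132 MyCorpVol /opt/CorpStorage
```

Review the printed line first, and append it to /etc/fstab as root only once it looks right.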

Figure 7

Do you want to know where those files have actually gone? Look at Figures 8, 9, and 10. Note: in this example, it’s a distributed volume. It distributes files across the servers based on a hash of each file name, so the placement looks random. In this example, server 134 is empty, but it may get some files next time, as files are distributed to all servers.

Figure 8

Figure 9

Let’s create another volume in replicated mode. Run the following commands:

gluster volume create MyCorpVol2 replica 3 transport tcp 192.168.157.132:/var/storage1 192.168.157.133:/var/storage2 192.168.157.134:/var/storage3 force

gluster volume start MyCorpVol2

gluster volume info

Figure 11

The same deal here on the client side:

mkdir -p /opt/CorpStorage2

mount -t glusterfs 192.168.157.132:/MyCorpVol2 /opt/CorpStorage2

Now, if you copy some files to the mounted directory, you should be able to see all of the files on all servers because the volume type is replicated. See Figures 12, 13, 14 and 15.

Figure 12

Figure 13

Figure 14

Figure 15

When you want to add more storage (it can be on the same servers or on a new server), you need to add bricks to the existing volume. Run the following commands:

mkdir -p /var/storage4

gluster volume add-brick MyCorpVol2 replica 4 192.168.157.132:/var/storage4 force

Figure 16

To reduce the size of a volume, use the remove-brick command:

gluster volume remove-brick MyCorpVol2 replica 3 192.168.157.132:/var/storage4 force

Figure 17

In this example, because we are using a replicated volume, you don’t need to rebalance the data; gluster copies all data to all servers as soon as the next file comes in. However, if you are using a distributed or striped volume, you need to rebalance the data. Let’s try another example:

In this example, there is a distributed volume with 2 bricks, and I want to add one more brick and then rebalance the data across the servers. Run the following commands:

gluster peer probe 192.168.157.134   # to add the new server to the storage pool

gluster volume add-brick MyCorpVol3 192.168.157.134:/var/share3 force

gluster volume rebalance MyCorpVol3 start

gluster volume rebalance MyCorpVol3 status   # to see the status of the rebalance

Figure 19

The same deal here when you want to remove a brick:

gluster volume remove-brick MyCorpVol3 192.168.157.134:/var/share3 force

gluster volume rebalance MyCorpVol3 start

gluster volume rebalance MyCorpVol3 status

Figure 20

To monitor your volumes and servers, run the command below:

gluster volume profile MyCorpVol3 info

Figure 21

If you want to grant or deny access to a GlusterFS volume for a specific client or subnet, use the following commands, replacing the IP or hostname with your own:

gluster volume set MyCorpVol3 auth.allow xxx.xxx.xxx.xxx (IP)

gluster volume set MyCorpVol3 auth.reject xxx.xxx.xxx.xxx (IP)

Figure 22

And finally, if you want to delete an existing volume, you must first stop the volume and then delete it:

gluster volume stop MyCorpVol3

gluster volume delete MyCorpVol3

That’s all. I hope you enjoyed reading this blog. Don’t forget to leave your comments here.

Regards,
